What is Screen Scraping? Definition & Use Cases

Screen scraping is a valuable technique for extracting data from websites and applications when structured access methods, such as APIs, are unavailable. It enables businesses and developers to gather information for market research, automation, and system integration. Unlike traditional web scraping, which extracts structured data directly from HTML, screen scraping retrieves content from a website’s or application’s graphical interface, which makes it useful for capturing dynamic or visually rendered data. When combined with proxies, screen scraping becomes more effective: spreading requests across many IP addresses reduces the impact of IP restrictions and anti-bot measures and keeps data collection running with fewer interruptions.

What is screen scraping?

Screen scraping is a method of extracting data directly from the graphical user interface (GUI) of a website or application. It captures content as it appears on the screen rather than accessing structured data through APIs or parsing the underlying HTML. This makes it particularly useful in scenarios where the data is not available in a structured format, such as when information is embedded in dynamically generated elements, images, or interactive components.

The origins of screen scraping date back to early computing, when developers needed a way to extract data from legacy systems that lacked database connectivity or API support. Initially, screen scraping was used to retrieve text-based output from mainframes and terminal-based applications, allowing businesses to integrate older systems with modern software. Over time, as web technologies evolved, so did screen scraping methods. Today, browser automation tools and AI-driven solutions enable screen scraping to extract content from complex, JavaScript-heavy websites, including single-page applications (SPAs) and other dynamic web interfaces.

Common use cases of screen scraping

While screen scraping shares similarities with general web scraping, its ability to capture information directly from rendered displays (rather than raw HTML or structured data feeds) unlocks possibilities that typical web-based methods don’t cover. Below are some real-world examples where screen scraping delivers unique value:

Extracting data from legacy or proprietary software

Many companies still rely on older applications without modern APIs or export functions. Screen scraping tools can read information right off the interface, making it possible to migrate data, create backups, or build integrations with newer platforms, without needing direct database access.
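Terminal and mainframe screens usually place each field at a fixed row and column, so one common way to read them is to slice the captured screen text by position. Below is a minimal sketch, assuming the screen text has already been captured; the field layout (`FIELD_LAYOUT`) and the sample screen are hypothetical and invented for illustration, and real positions would come from inspecting the actual application:

```python
from dataclasses import dataclass

# Hypothetical layout: field -> (row, start_col, end_col), zero-indexed,
# end_col exclusive. These positions are invented for this example.
FIELD_LAYOUT = {
    "customer_id": (0, 13, 21),
    "name": (1, 13, 40),
    "balance": (2, 13, 25),
}


@dataclass
class CustomerRecord:
    customer_id: str
    name: str
    balance: float


def parse_screen(screen_text: str) -> CustomerRecord:
    """Slice a captured fixed-width terminal screen into named fields."""
    rows = screen_text.splitlines()
    fields = {}
    for field, (row, start, end) in FIELD_LAYOUT.items():
        line = rows[row] if row < len(rows) else ""
        fields[field] = line[start:end].strip()
    return CustomerRecord(
        customer_id=fields["customer_id"],
        name=fields["name"],
        # Remove thousands separators before converting the balance.
        balance=float(fields["balance"].replace(",", "")),
    )


# Simulated capture: each label is padded to 13 columns, as a terminal would.
screen = "\n".join([
    f"{'CUSTOMER ID:':<13}00042817",
    f"{'NAME:':<13}ACME TRADING LTD",
    f"{'BALANCE:':<13}12,450.75",
])
print(parse_screen(screen))
```

If a field is empty or the layout shifts, `float()` raises `ValueError`, which is a useful early signal that the column positions need updating.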

Automating tasks in desktop or terminal-based systems

In situations where processes happen inside a desktop GUI or command-line environment, screen scraping can replicate user interactions. This is especially helpful for automating repetitive tasks in customer service software, financial terminals, or ERP solutions that offer no programmatic access to underlying data.

Testing and quality assurance for non-web applications

Some QA teams use screen scraping to validate that on-screen elements match expected outputs in client-side or thick-client applications. By comparing what’s displayed in the UI to known baselines, testers can automate functional checks without digging into the software’s core logic.
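Below is a minimal sketch of the baseline comparison, assuming the on-screen text has already been captured as a list of lines. It uses Python’s standard `difflib` to report differences; the sample values are invented, and whitespace is normalized so that spacing-only changes do not fail the check:

```python
import difflib


def compare_to_baseline(captured: list[str], baseline: list[str]) -> list[str]:
    """Return unified-diff lines describing how captured UI text differs
    from the expected baseline; an empty list means the screen matches."""
    # Collapse runs of whitespace so insignificant spacing changes are ignored.
    norm_captured = [" ".join(line.split()) for line in captured]
    norm_baseline = [" ".join(line.split()) for line in baseline]
    return list(
        difflib.unified_diff(
            norm_baseline,
            norm_captured,
            fromfile="baseline",
            tofile="captured",
            lineterm="",
        )
    )


baseline = ["Order total:  $42.00", "Status: Paid"]
captured = ["Order total: $42.00", "Status: Pending"]

diff = compare_to_baseline(captured, baseline)
if diff:
    print("\n".join(diff))
else:
    print("Screen matches baseline")
```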

Capturing information from kiosk or point-of-sale (POS) interfaces

Self-service kiosks, POS terminals, and other specialized hardware often lock down internal data paths. Screen scraping can read totals, product details, or transaction logs directly from the screen, allowing businesses to track sales data or monitor system health without modifying proprietary firmware.

Real-time monitoring of internal dashboards or analytics tools

Some organizations rely on internal dashboards that update in real time but don’t offer a convenient “export” or “API” button. Screen scraping can periodically capture the display and parse essential metrics, such as inventory levels or production rates. This works well for quick analysis or alerts without rebuilding the software’s reporting features.

Because screen scraping can adapt to almost any visual interface, it extends the reach of data collection well beyond traditional web-based methods.

How does screen scraping work?

Modern screen scraping tools use advanced browser automation frameworks to interact with web pages in the same way a human user would. These tools can navigate through different sections of a website, simulate user actions like scrolling and clicking, and capture data that would otherwise be inaccessible through standard HTML parsing. Additionally, Optical Character Recognition (OCR) technology allows screen scrapers to extract text from images, scanned documents, or CAPTCHA-protected content, further expanding its applications.

The first step in screen scraping is accessing the target page. This can be done manually by navigating to the site or, more commonly, through automation using tools like Selenium, Puppeteer, or Playwright. These browser automation frameworks allow scripts to load web pages, interact with elements, and simulate user behaviors such as scrolling, clicking, or filling out forms.

Once the page loads, the next step is capturing the displayed data. If the content is available as plain text or standard HTML, it can be extracted directly. However, modern websites often use JavaScript frameworks to render elements dynamically, so the data is not present in the initial page source. In such cases, screen scraping tools rely on browser automation to execute scripts, wait for elements to load, and capture the visible content. If the data is embedded in non-text formats, such as images or scanned PDFs, OCR is used to convert the visual content into machine-readable text.
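Waiting for dynamically rendered elements is usually done with an explicit wait: poll a condition until it holds or a timeout expires. Selenium’s `WebDriverWait` and Playwright’s auto-waiting build on this idea. Below is a minimal, framework-agnostic sketch of the polling loop; `price_element_loaded` is a hypothetical stand-in for a real check such as “the price element is now present in the DOM”:

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def wait_until(
    condition: Callable[[], Optional[T]],
    timeout: float = 10.0,
    poll_interval: float = 0.5,
) -> T:
    """Call `condition` repeatedly until it returns a truthy value or the
    timeout elapses, mirroring an explicit wait in browser automation."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll_interval)


# Simulated page: the element "appears" on the third check.
checks = {"count": 0}


def price_element_loaded() -> Optional[str]:
    checks["count"] += 1
    return "$19.99" if checks["count"] >= 3 else None


print(wait_until(price_element_loaded, timeout=5, poll_interval=0.01))
```

Polling with a deadline, rather than a fixed `sleep`, returns as soon as the content is ready and fails loudly when it never appears.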

After capturing the necessary data, the extracted content must be processed and structured. Raw scraped data can be messy, often containing unwanted elements such as advertisements, navigation menus, or formatting inconsistencies. Cleaning the data involves filtering out irrelevant content, standardizing formats, and extracting only the relevant information. Once refined, the data is structured into usable formats such as JSON, CSV, or databases, making it accessible for analysis, reporting, or integration into other applications.
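Below is a minimal sketch of the cleaning and structuring step, assuming the scraper has already captured the visible text of a product listing as individual lines. The noise keywords and the `name | price` line format are hypothetical; real pages need their own patterns:

```python
import csv
import io
import json
import re

# Hypothetical boilerplate lines that surround the real content.
NOISE = re.compile(r"^(home|login|sponsored|advertisement|menu)\b", re.IGNORECASE)
# Hypothetical product line format: "<name> | <price>"
PRODUCT = re.compile(r"^(?P<name>.+?)\s*\|\s*\$?(?P<price>[\d,]+(?:\.\d{2})?)$")


def clean_and_structure(raw_lines: list[str]) -> list[dict]:
    records = []
    for line in raw_lines:
        line = " ".join(line.split())  # collapse stray whitespace
        if not line or NOISE.match(line):
            continue  # drop empty lines, navigation, and ads
        match = PRODUCT.match(line)
        if match:
            records.append({
                "name": match["name"],
                "price": float(match["price"].replace(",", "")),
            })
    return records


raw = [
    "Home  Login  Menu",
    "Sponsored: Buy now!",
    "Wireless Mouse |  $24.99",
    "",
    "USB-C Hub | $1,049.00",
]
records = clean_and_structure(raw)

# JSON output
print(json.dumps(records, indent=2))

# CSV output, written to an in-memory buffer for this example
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=["name", "price"])
writer.writeheader()
writer.writerows(records)
print(buffer.getvalue())
```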

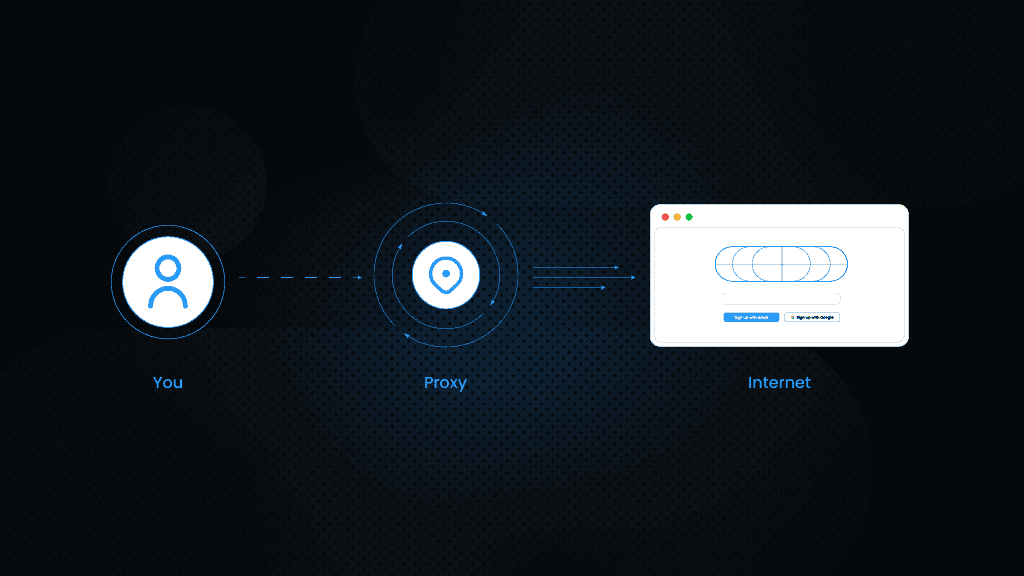

Throughout the process, screen scrapers must also navigate anti-bot mechanisms, CAPTCHAs, and IP blocking, which many websites implement to prevent automated data extraction. Proxies, rotating IPs, and CAPTCHA-solving services can help mitigate these restrictions by allowing screen scraping to run efficiently without frequent interruptions.
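Below is a minimal sketch of IP rotation using Python’s standard `urllib`: each request is routed through the next proxy in a pool, in round-robin order. The proxy addresses and credentials are hypothetical placeholders (a real pool would come from your proxy provider), and the example only builds the openers without sending any traffic:

```python
import itertools
import urllib.request

# Hypothetical proxy endpoints; replace with real ones from your provider.
PROXY_POOL = [
    "http://user:pass@proxy1.example.com:8000",
    "http://user:pass@proxy2.example.com:8000",
    "http://user:pass@proxy3.example.com:8000",
]

_rotation = itertools.cycle(PROXY_POOL)


def next_opener() -> tuple[str, urllib.request.OpenerDirector]:
    """Return the next proxy in round-robin order and an opener that routes
    both HTTP and HTTPS traffic through it."""
    proxy = next(_rotation)
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return proxy, urllib.request.build_opener(handler)


# A real request would look like:
#   proxy, opener = next_opener()
#   html = opener.open("https://example.com", timeout=10).read()
for _ in range(4):
    proxy, _opener = next_opener()
    print("next request via", proxy.split("@")[1])
```

Round-robin is the simplest policy; production setups often also drop proxies that fail repeatedly or rely on a provider-side rotating endpoint instead.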

Tools and techniques for screen scraping

Screen scraping relies on various tools to automate interactions, extract data, and process content efficiently. These tools can be categorized into automation frameworks, text extraction technologies, and no-code solutions.

1. Automation and web interaction tools

- Selenium, Puppeteer, Playwright – Browser automation frameworks that allow scripts to interact with web pages, mimicking user actions like clicking, scrolling, and form submissions.

- Headless Browsers – Web browsers without a graphical interface, used to render JavaScript-heavy sites efficiently.

2. Data extraction and processing

- Tesseract OCR – Optical Character Recognition (OCR) engine used to extract text from images, making it essential for screen scraping non-HTML content.

- Regular Expressions (Regex) – A pattern-matching technique for identifying and extracting specific text within unstructured content.

3. No-code and low-code solutions

- ParseHub, Octoparse – User-friendly no-code tools that allow users to extract web data visually without writing code.

- Apify – A cloud-based scraping platform that provides automation and ready-to-use scraping solutions.

Depending on your needs and technical proficiency, these tools let both developers and non-technical users perform screen scraping effectively.
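As an illustration of regular expressions in this role, the short sketch below pulls fields out of text captured from a receipt-style screen (for instance, OCR output from a POS display). The patterns and sample text are illustrative assumptions and would need tuning for real output:

```python
import re

# Illustrative patterns; real screens may use other date and currency formats.
DATE_RE = re.compile(r"\b(\d{4}-\d{2}-\d{2})\b")
AMOUNT_RE = re.compile(r"TOTAL\s*:?\s*\$?([\d,]+\.\d{2})", re.IGNORECASE)
SKU_RE = re.compile(r"\bSKU[-\s]?(\d{6})\b")


def extract_fields(text: str) -> dict:
    """Pull the date, total, and SKU codes out of unstructured screen text."""
    date = DATE_RE.search(text)
    total = AMOUNT_RE.search(text)
    return {
        "date": date.group(1) if date else None,
        "total": float(total.group(1).replace(",", "")) if total else None,
        "skus": SKU_RE.findall(text),
    }


captured = """
STORE #118      2024-03-07 14:22
SKU 104233  COFFEE BEANS   12.50
SKU-551920  MILK 1L         1.99
Total: $14.49
"""
print(extract_fields(captured))
```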

The role of automation and bots in screen scraping

Automation is key to handling large-scale data extraction efficiently. Bots make the screen scraping process faster, more scalable, and more reliable. They can:

- Simulate user actions such as clicking, scrolling, filling forms, and even interacting with dropdown menus or pop-ups.

- Capture dynamic content loaded via JavaScript, AJAX requests, and single-page applications (SPAs), ensuring that data hidden behind user interactions is extracted.

- Repeatedly extract updated data over time by scheduling periodic scrapes, making it useful for real-time price tracking, stock market updates, or news aggregation.

- Adapt to website changes by integrating machine learning models that detect layout modifications and automatically adjust scraping techniques.
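Below is a minimal sketch of scheduled re-scraping with change detection. It assumes a `fetch` callable that returns the captured text (in practice a browser-automation routine; here a hypothetical stub). Hashing each capture lets the loop report only when the displayed data actually changes:

```python
import hashlib
import time
from typing import Callable, Iterator


def watch(fetch: Callable[[], str], interval: float, max_runs: int) -> Iterator[str]:
    """Call `fetch` every `interval` seconds and yield the content only when
    it differs from the previous capture."""
    last_digest = None
    for run in range(max_runs):
        content = fetch()
        digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
        if digest != last_digest:
            last_digest = digest
            yield content
        if run < max_runs - 1:
            time.sleep(interval)


# Stub standing in for a real scrape: the price changes once.
snapshots = iter(["Price: $10", "Price: $10", "Price: $12", "Price: $12"])

for update in watch(lambda: next(snapshots), interval=0.01, max_runs=4):
    print("changed:", update)
```

For long-running jobs, an external scheduler such as cron is usually a sturdier trigger than an in-process loop; the hashing idea stays the same.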

Screen scraping vs. web scraping

Screen scraping and web scraping are both methods of extracting data from websites, but they differ in how they retrieve information.

| Feature | Screen Scraping | Web Scraping |
|---|---|---|
| Data source | Graphical user interface (GUI) | HTML structure, APIs |
| Tools used | OCR, browser automation (Selenium) | Scrapy, BeautifulSoup, direct HTTP requests |
| Complexity | Higher: depends on a stable UI and breaks when it changes | Lower: parses structured content |
| Efficiency | Slower: renders full web pages | Faster: extracts HTML elements directly |
| Use cases | Capturing dynamic content, legacy system integration | Collecting structured data, automating data pipelines |

Screen scraping is the preferred method when data is visually displayed but not readily available in the website's HTML structure. This is often the case with dynamic elements such as JavaScript-generated content, embedded tables, or interactive charts that require a graphical interface for proper rendering.

It is also a practical approach when a website does not provide an API or other structured data sources, leaving screen scraping as the only viable option for extracting necessary information. Additionally, this method is essential for retrieving data from legacy applications and software interfaces that lack modern data access mechanisms. In situations where content is embedded in images or other rendered elements, Optical Character Recognition (OCR) is often required to convert visual text into machine-readable data.

Web scraping, on the other hand, is the preferred choice when data is structured and directly accessible within the website’s HTML, API, or XML feeds. Because web scraping extracts information from well-organized code, it is generally faster and more efficient, making it ideal for large-scale data collection. When an API is available, it provides a more reliable and cost-effective solution, reducing the need for complex data scraping techniques and minimizing the risk of encountering site changes that could disrupt data extraction.

Web scraping also avoids relying on GUI elements, which can change frequently and require continuous adjustments to scraping scripts. Because it focuses on structured content, web scraping is often the more stable and scalable approach for automated data retrieval.
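For contrast, below is a minimal sketch of the web scraping side: when values already sit in the HTML, a parser can read them directly without rendering anything. It uses Python’s built-in `html.parser`; the table markup is a made-up example, and libraries such as BeautifulSoup offer a more convenient API for the same job:

```python
from html.parser import HTMLParser


class TableCellParser(HTMLParser):
    """Collect the text of each <td>/<th> cell, grouped by table row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self.rows.append([])
        elif tag in ("td", "th") and self.rows:
            self._in_cell = True
            self.rows[-1].append("")

    def handle_endtag(self, tag):
        if tag in ("td", "th"):
            self._in_cell = False

    def handle_data(self, data):
        # Ignore whitespace between tags; keep only text inside cells.
        if self._in_cell:
            self.rows[-1][-1] += data.strip()


page = """
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Laptop</td><td>$899</td></tr>
  <tr><td>Monitor</td><td>$199</td></tr>
</table>
"""
parser = TableCellParser()
parser.feed(page)
print(parser.rows)
```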

Both methods have their place, and many data collection strategies use a combination of screen scraping and web scraping for a more comprehensive approach.

When to use one over the other

Use screen scraping tools when structured data isn’t available. Use web scraping when data can be extracted directly from HTML.

Advantages of screen scraping:

- Works when APIs are unavailable.

- Extracts human-visible data accurately.

- Bypasses restrictions on structured data access.

- Useful for legacy system integration where APIs do not exist.

- Can capture complex visual elements such as graphs and tables that are not exposed in HTML.

Limitations of screen scraping:

- Prone to UI changes, requiring frequent updates to scraping scripts.

- Lower efficiency compared to web scraping due to reliance on rendering.

- Potentially higher resource consumption, especially when using headless browsers.

- Risk of legal and ethical concerns if scraping violates a website’s terms of service.

- More difficult to extract structured data compared to API-based or direct HTML scraping methods.

Deeper look into challenges & limitations of screen scraping

Screen scraping can be a powerful method for extracting on-screen data, but it faces various hurdles—ranging from technical barriers to maintaining responsible usage. Below are some common issues that can impact its overall effectiveness and reliability, along with possible strategies to address them.

Anti-scraping mechanisms

- CAPTCHAs, IP blocking, bot detection – Sites often use advanced detection, including fingerprinting and behavioral analysis, to distinguish automated bots from real users.

- JavaScript challenges and dynamically loaded content – Some web pages rely on complex scripts or incremental page updates, making it difficult for a simple scraper to capture all data accurately.

Solution: Employ rotating proxies, headless browsers, and CAPTCHA-solving services. Simulate human-like browsing by randomizing user agents, introducing realistic delays, and emulating mouse movements or keystrokes where applicable.
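Below is a minimal sketch of two of these techniques, randomized user agents and jittered delays, using only the standard library. The user-agent strings are truncated placeholders and the delay range is an assumption to tune per site; the example builds requests but does not send them:

```python
import random
import time
import urllib.request

# Truncated placeholder user-agent strings; use complete, current ones in practice.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) ...",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 14_0) ...",
    "Mozilla/5.0 (X11; Linux x86_64) ...",
]


def build_request(url: str, rng: random.Random) -> urllib.request.Request:
    """Create a request with a randomly chosen User-Agent header."""
    return urllib.request.Request(url, headers={"User-Agent": rng.choice(USER_AGENTS)})


def human_delay(rng: random.Random, base: float = 2.0, jitter: float = 1.5) -> float:
    """Sleep for base +/- jitter seconds (never below zero) and return the delay."""
    delay = max(0.0, base + rng.uniform(-jitter, jitter))
    time.sleep(delay)
    return delay


rng = random.Random()
for url in ["https://example.com/page/1", "https://example.com/page/2"]:
    req = build_request(url, rng)
    # urllib stores header names capitalized, so "User-Agent" becomes "User-agent".
    print(req.full_url, "->", req.get_header("User-agent"))
    # urllib.request.urlopen(req) would send the request; omitted here.
    human_delay(rng, base=0.1, jitter=0.05)
```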

Responsible usage and access considerations

- Website policies and usage restrictions – Some websites have access policies or usage guidelines that discourage automated data extraction without approval.

- Handling personal data – Collecting or storing sensitive details requires caution to ensure user privacy and maintain trust.

Solution: Follow widely accepted “good neighbor” scraping practices, such as limiting request rates, avoiding sensitive data, and using official APIs when available. Engaging in open communication with site owners can also help establish acceptable scraping boundaries.
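One concrete way to apply these practices is to honor a site’s `robots.txt` and space out requests. Below is a minimal sketch using Python’s standard `urllib.robotparser`; the rules are parsed from an inline example rather than fetched, and the bot name `ExampleScreenScraper` is hypothetical:

```python
import time
import urllib.robotparser

ROBOTS_TXT = """\
User-agent: *
Disallow: /account/
Crawl-delay: 2
"""

BOT_NAME = "ExampleScreenScraper"  # hypothetical user-agent name

robots = urllib.robotparser.RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())
# Against a live site: robots.set_url("https://example.com/robots.txt"); robots.read()


class RateLimiter:
    """Enforce a minimum interval between consecutive requests."""

    def __init__(self, min_interval: float):
        self.min_interval = min_interval
        self._last = float("-inf")

    def wait(self) -> None:
        elapsed = time.monotonic() - self._last
        if elapsed < self.min_interval:
            time.sleep(self.min_interval - elapsed)
        self._last = time.monotonic()


# Use the site's Crawl-delay if it declares one, otherwise a conservative default.
delay = robots.crawl_delay(BOT_NAME) or 1.0
limiter = RateLimiter(delay)

for path in ["/products/", "/account/settings"]:
    url = "https://example.com" + path
    if robots.can_fetch(BOT_NAME, url):
        print("allowed:", url)  # limiter.wait() would precede each real request
    else:
        print("skipping disallowed:", url)
```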

Data reliability issues

- Frequent UI changes – Even small adjustments to a webpage’s structure or layout can break existing scrapers and invalidate their results.

- Dynamic or script-based updates – If new content loads asynchronously via JavaScript, a scraper may miss crucial details unless specifically designed to account for them.

- Complex page elements – Sites with deeply nested HTML tags, encoded text, or unusual formatting can produce inconsistent or error-prone data.

Solution: Schedule regular maintenance to update and refine data scraping scripts. Use more adaptable approaches, such as machine learning–based scrapers, which can detect and respond to layout changes. Always validate extracted data (e.g., by cross-referencing multiple sources) to ensure consistency and accuracy.
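Below is a minimal sketch of the validation step, assuming each scraped record is a dict with hypothetical `name` and `price` fields and that a second source (for example, a different page or an earlier run) is available for cross-checking. The 5% tolerance is an arbitrary illustration:

```python
def validate_records(records: list[dict]) -> list[str]:
    """Return human-readable problems found in scraped records."""
    problems = []
    for i, rec in enumerate(records):
        if not rec.get("name"):
            problems.append(f"record {i}: missing name")
        price = rec.get("price")
        if not isinstance(price, (int, float)) or price <= 0:
            problems.append(f"record {i}: invalid price {price!r}")
    return problems


def cross_check(primary: dict, secondary: dict, tolerance: float = 0.05) -> list[str]:
    """Flag items whose price differs between two sources by more than
    `tolerance` (relative to the larger of the two values)."""
    mismatches = []
    for name, price in primary.items():
        other = secondary.get(name)
        if other is None:
            mismatches.append(f"{name}: not found in second source")
        elif abs(price - other) > tolerance * max(price, other):
            mismatches.append(f"{name}: {price} vs {other}")
    return mismatches


scraped = [{"name": "Laptop", "price": 899.0}, {"name": "", "price": -1}]
print(validate_records(scraped))
print(cross_check({"Laptop": 899.0, "Monitor": 199.0}, {"Laptop": 899.0, "Monitor": 249.0}))
```

A sudden spike in validation failures is often the first visible sign that a layout change has broken the scraper.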

Wrapping up

Screen scraping has emerged as an essential method for extracting data from web pages and applications that do not provide structured access through APIs. By capturing data from a website’s graphical user interface, this technique allows businesses and developers to access and analyze information that would otherwise be difficult to retrieve. Over time, screen scraping software has evolved from its origins in legacy systems to sophisticated automation-driven solutions capable of handling dynamic, JavaScript-heavy environments.

Given the increasing complexity of web environments, proxies have become crucial for uninterrupted data extraction. If you need help bypassing restrictions, maintaining anonymity, and scaling your scraping operations, consider the range of tools and solutions Smartproxy offers.

About the author

Dominykas Niaura

Technical Copywriter

Dominykas brings a unique blend of philosophical insight and technical expertise to his writing. Starting his career as a film critic and music industry copywriter, he's now an expert in making complex proxy and web scraping concepts accessible to everyone.


All information on Smartproxy Blog is provided on an as is basis and for informational purposes only. We make no representation and disclaim all liability with respect to your use of any information contained on Smartproxy Blog or any third-party websites that may be linked therein.